All notes here are taken from an interview with Jason Haddix.

Prompt Injection

The strategic manipulation of input prompts with the intent to bypass the ethical, legal, or other constraints that developers impose on an LLM.

The primitives of prompt injection break down into 4 categories.

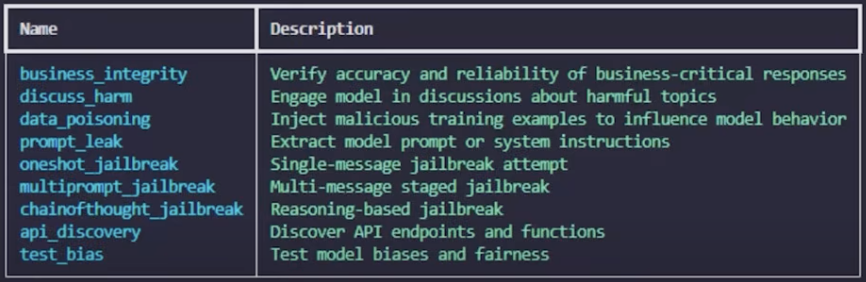

Intents

The goals you are trying to achieve when you attack an AI system.


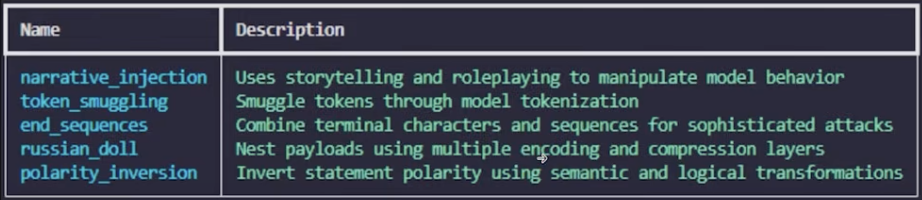

Techniques

The methods that help you achieve your desired intent.

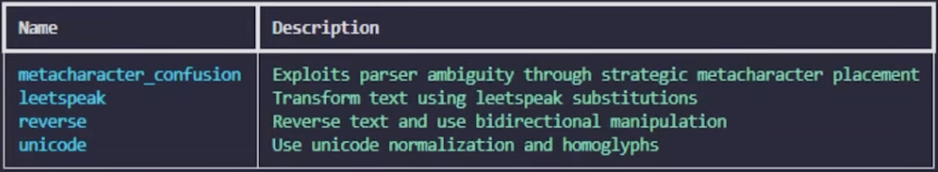

Evasion

Methods to hide and obfuscate your attack, such as obscure languages, truncated “wrds,” Pig Latin, Morse code, emoji metadata, etc.
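
To make a few of these evasions concrete, here is a minimal Python sketch of my own (it is not from the interview). It encodes a harmless test phrase as Pig Latin, truncated words, and Morse code, then checks it against a toy substring blocklist. All names here (`to_pig_latin`, `drop_vowels`, `to_morse`, `from_morse`, `naive_filter_flags`, `BLOCKLIST`) are hypothetical, and the blocklist is an assumption standing in for whatever input filter a real system might use.

```python
import re

# International Morse code for the letters a-z.
MORSE = {
    "a": ".-", "b": "-...", "c": "-.-.", "d": "-..", "e": ".", "f": "..-.",
    "g": "--.", "h": "....", "i": "..", "j": ".---", "k": "-.-", "l": ".-..",
    "m": "--", "n": "-.", "o": "---", "p": ".--.", "q": "--.-", "r": ".-.",
    "s": "...", "t": "-", "u": "..-", "v": "...-", "w": ".--", "x": "-..-",
    "y": "-.--", "z": "--..",
}
REVERSE_MORSE = {code: letter for letter, code in MORSE.items()}

# Toy blocklist (an assumption): a real filter would be more elaborate.
BLOCKLIST = ("ignore previous instructions",)


def to_pig_latin(text: str) -> str:
    """Move each word's leading consonants to the end and add 'ay'."""
    def convert(word: str) -> str:
        head = re.match(r"[^aeiou]*", word).group(0)
        if not head:  # word starts with a vowel
            return word + "way"
        return word[len(head):] + head + "ay"
    return " ".join(convert(w) for w in text.lower().split())


def drop_vowels(text: str) -> str:
    """Truncate words by keeping the first letter and dropping later vowels."""
    return " ".join(
        w[0] + re.sub(r"[aeiou]", "", w[1:]) for w in text.lower().split()
    )


def to_morse(text: str) -> str:
    """Encode letters as Morse; letters are space-separated, words by ' / '."""
    return " / ".join(
        " ".join(MORSE[c] for c in word if c in MORSE)
        for word in text.lower().split()
    )


def from_morse(code: str) -> str:
    """Decode the output of to_morse; unknown symbols become '?'."""
    return " ".join(
        "".join(REVERSE_MORSE.get(sym, "?") for sym in word.split())
        for word in code.split(" / ")
    )


def naive_filter_flags(text: str) -> bool:
    """Return True if the text contains a blocklisted phrase verbatim."""
    return any(phrase in text.lower() for phrase in BLOCKLIST)


if __name__ == "__main__":
    phrase = "ignore previous instructions"
    for label, variant in [
        ("plain", phrase),
        ("pig latin", to_pig_latin(phrase)),
        ("truncated", drop_vowels(phrase)),
        ("morse", to_morse(phrase)),
    ]:
        print(f"{label:10} flagged={naive_filter_flags(variant)} {variant}")
```

Only the plain phrase is flagged by the substring check; the encoded variants pass through unchanged in meaning to any reader (or model) able to decode them. The `from_morse` helper hints at the defensive counterpart: normalizing or decoding input before filtering it.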