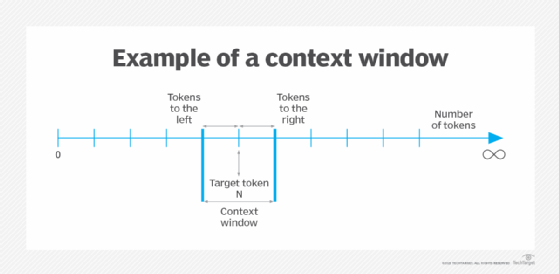

The Context Window (or Context Length) refers to the amount of text, in tokens, that a Large Language Model can consider or “remember” at any one time.

An LLM’s context window can be thought of as the equivalent of its working memory. It determines how long of a conversation it can carry out without forgetting details from earlier in the exchange. It also determines the maximum size of documents or code samples that it can process at once.

Pros/Cons of increasing context window size

| Pros | Cons |

|---|---|

| Longer, more coherent conversations | Increased Costs |

| Fewer Hallucinations | More opportunities to confuse the AI |

| Ability to analyze large amounts of data | Doesn’t automatically mean better outputx |