What can I do with LM Studio?

- Download and run local LLMs such as gpt-oss, Llama, or Qwen

- Use a simple and flexible chat interface

- Connect Model Context Protocol servers and use them with local models

- Search for and download models (via Hugging Face 🤗)

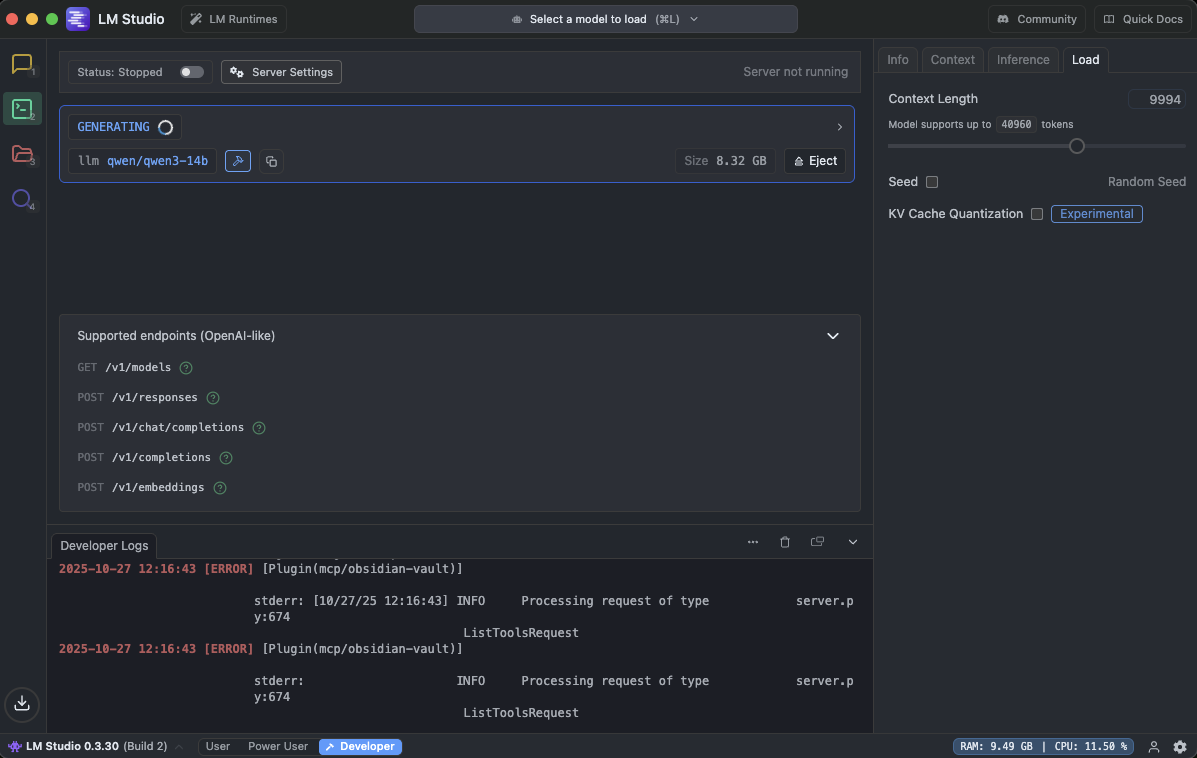

- Serve local models on OpenAI-like endpoints, locally and on the network

- Manage your local models, prompts, and configurations

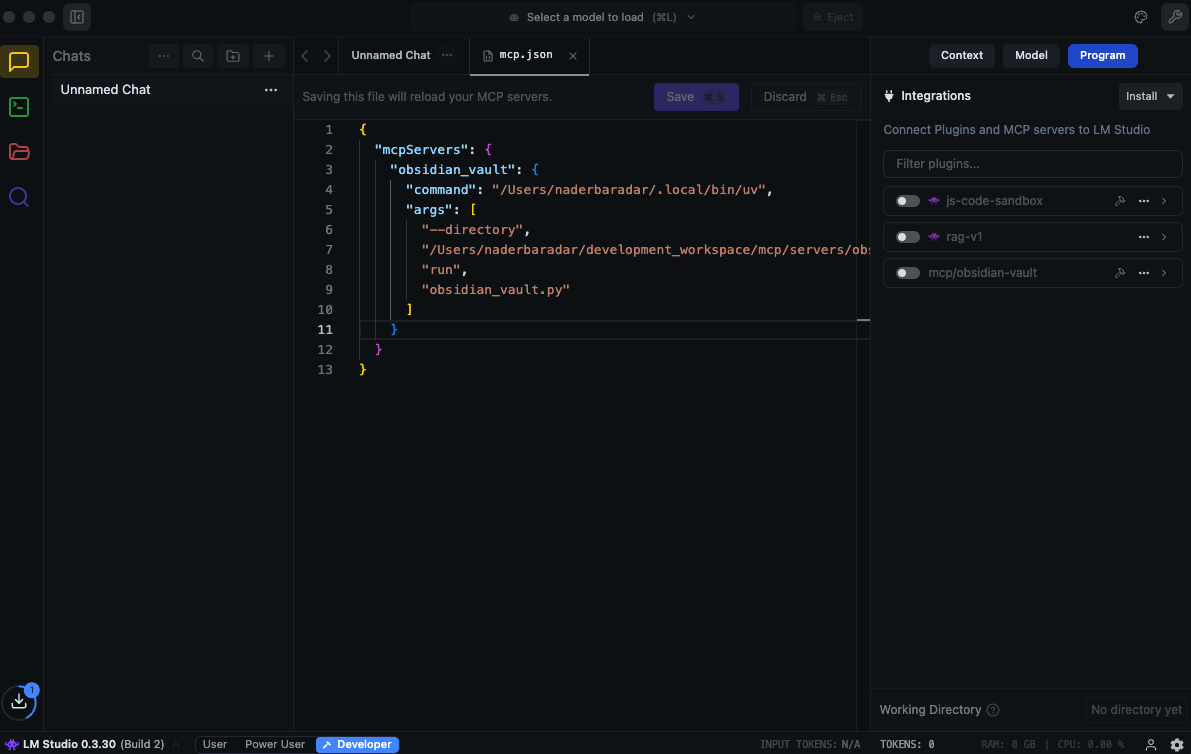
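As a rough illustration of the OpenAI-like endpoints mentioned above, the sketch below sends a chat request to a locally served model using only the Python standard library. The base URL `http://localhost:1234/v1`, the `/chat/completions` path, and the response shape are assumptions modeled on the OpenAI chat API; check the address shown in your own LM Studio server settings. The helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request

# Assumed address of the local server; replace with the one LM Studio shows you.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str, base_url: str = BASE_URL) -> str:
    """Send the request and return the first reply's text.

    Assumes the response follows the OpenAI chat completion layout.
    """
    request = build_chat_request(model, prompt, base_url)
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Example call (requires the local server to be running with a model loaded):
# print(chat("your-model-id", "Say hello in one sentence."))
```

Splitting request construction from sending keeps the payload easy to inspect before anything goes over the network. To reach a server on another machine, pass that machine's address as `base_url`.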

How can I use MCP?

https://lmstudio.ai/docs/app/mcp

To add Model Context Protocol servers:

- Click the wrench icon (top right)

- Click the “Program” tab in the right panel

- Click the “Install” dropdown to the right of “🔌 Integrations”

- Update your `mcp.json`
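The last step can also be scripted. The sketch below adds one server entry to an mcp.json file, assuming the file keeps its servers under a top-level `"mcpServers"` object keyed by server name, with each entry holding either a `"url"` or a `"command"` and `"args"`. That layout, the helper name `add_mcp_server`, and the example server are assumptions for illustration; confirm the exact schema on the MCP documentation page linked above.

```python
import json
from pathlib import Path


def add_mcp_server(config_path: str, name: str, entry: dict) -> dict:
    """Insert or overwrite one server entry in an mcp.json-style file.

    Assumes servers live under a top-level "mcpServers" object.
    """
    path = Path(config_path)
    text = path.read_text(encoding="utf-8").strip() if path.exists() else ""
    config = json.loads(text) if text else {}

    # Keep any servers that are already configured; only touch this one name.
    config.setdefault("mcpServers", {})[name] = entry

    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


# Example with a hypothetical remote server (path and URL are placeholders):
# add_mcp_server("mcp.json", "example-server", {"url": "https://example.com/mcp"})
```

Editing the file through the in-app editor achieves the same result; a script is mainly useful for sharing one setup across several machines.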

How can I increase the context size?

You can increase the context window size for a model by going to the “Developer” view (green button on the left panel), then opening the “Load” tab on the right panel.